About

AS-Docker is a way to work with one or many Docker projects, locally or on a VPS, with all the advantages of a native project setup. See Features for more details.

Join one common, easy-to-set-up Docker environment for all team members (back-end, front-end and dev-ops) working on any OS: Mac, Windows or Linux!

The challenges

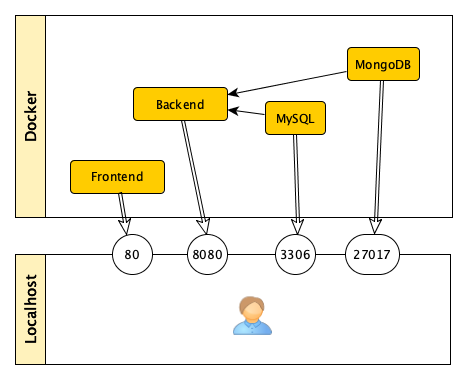

Ports confusion

In a regular Docker project, you have to map every container port to localhost to be able to connect to the containers directly.


But some developers have their own native MySQL instance installed locally, so they changed the MySQL port from 3306 to 3307.

Others have more than one project installed and need a custom front-end port, so they changed port 80 to 81.

Yet other developers can't run Mongo on the default port, so they want to change it as well. It's a real headache for the development team!

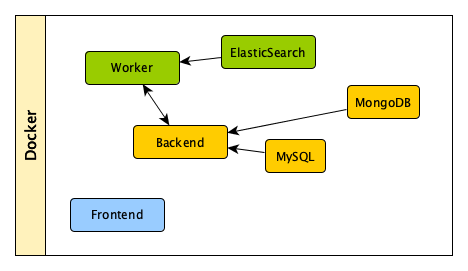

Multi-projects environment

In real environments it is common to have many microservices (separate projects/directories) under a single stack.

When we create a new project and add ElasticSearch and a Worker app to it, it would be great if the Backend could communicate with the Worker or ElasticSearch.

By default, the external_links param can be used, but then we can't create reverse communication. In other words, we can request the Worker from the Backend, but we can't request the Backend from the Worker.


Why? external_links creates a Docker dependency: all the "dependency" projects must already be running before the head project starts. That's great for one-way communication (a parent-and-children project strategy), but it doesn't work when you need two-way communication between the projects.

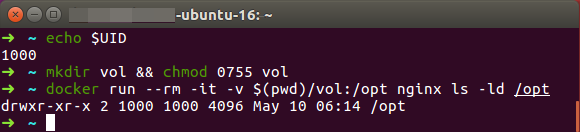

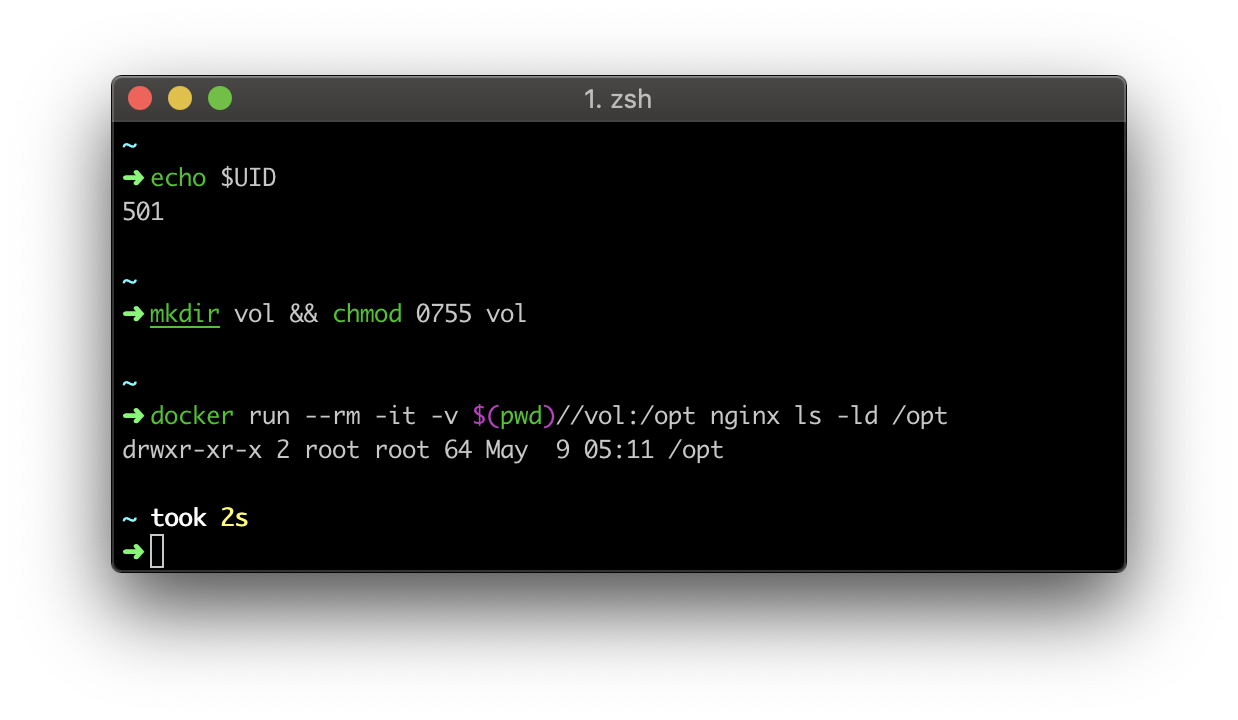

"permission denied" problem

When you build and deploy a Docker image, you can manage all the files and directories inside it (and set proper permissions), but in local development, where volumes are used, you can rely only on the operating system's rules, and those differ between operating systems. The result? One configuration works on Linux but throws an error on macOS or Windows, while another works on Windows but crashes on Linux.

Local work with Docker has been painful from the very beginning. There are many well-known issues, and even the Docker team doesn't have a single recommended solution. We collected the Docker community's workarounds and solutions and implemented the best of them in AS-Docker.

AS-Docker - basic concept

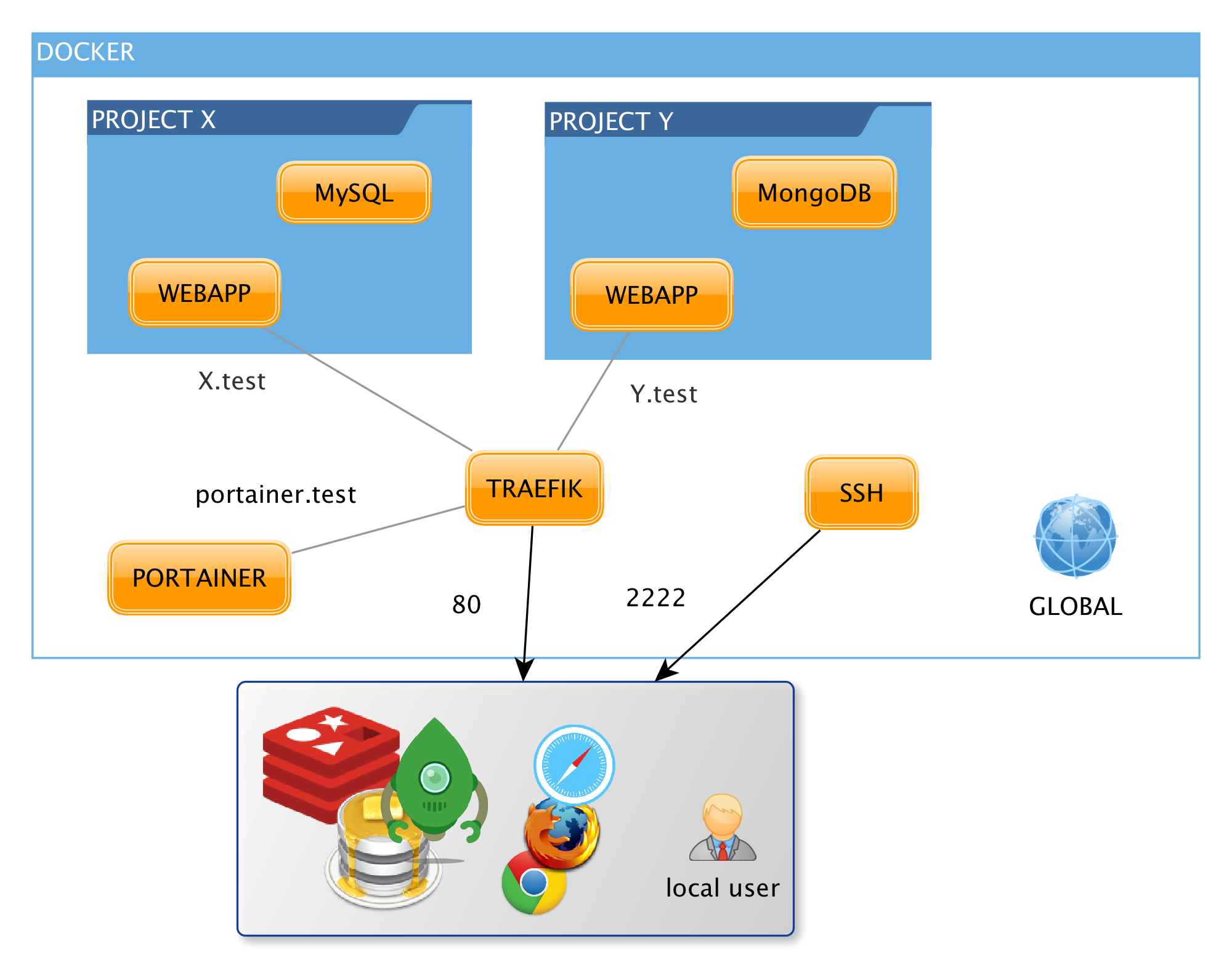

To meet the development team's expectations, we created a solution that handles many projects on one local machine. The main goals were to enable two-way communication between all the containers, to remove port mapping and, most importantly, to use no external tools or other applications: only a pure Docker environment.

We started by adding one global project called Environment. We decided to use Traefik as a global proxy for all web-based containers. The Traefik container is mapped to local port 80 and reads the Host header to pass each request to the proper container.
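Because routing depends only on the Host header, you can exercise it without any DNS setup by sending that header yourself. A minimal bash sketch; the function name as_via_traefik and the CURL_BIN override (useful for a dry run) are hypothetical, not part of AS-Docker:

```bash
# Hypothetical helper: request a site through the Traefik proxy on localhost:80
# by setting the Host header explicitly, so no DNS or /etc/hosts entry is needed.
# CURL_BIN lets you swap curl for another command (e.g. echo) for a dry run.
as_via_traefik() {
  local host="$1" path="${2:-/}"
  "${CURL_BIN:-curl}" -H "Host: ${host}" "http://localhost${path}"
}
```

For example, once the Environment stack is up, as_via_traefik portainer.test should return the Portainer page.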

The next step was to create an SSH tunnel for all the containers. We decided to use a simple SSH app that can reach all the other containers by their names. We mapped it to local port 2222, so we can connect to all the non-web containers like MySQL, Mongo or Redis via this tunnel.

But one more important issue remained: containers should be able to connect with each other without the links or external_links params.

Thanks to one of the latest Docker features, "native routing", this is now easy! All the containers have to belong to one global network; let's call it global. The container_name then becomes the container's internal Docker hostname.

Any other container on the same network can connect to it using the container's name as the host.
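To check this name resolution from inside the network, you can start a throwaway container attached to global and look up a container name. A bash sketch; the busybox image's nslookup does the lookup, and the names as_resolve and DOCKER_BIN are hypothetical:

```bash
# Hypothetical helper: resolve a container name from a temporary container
# attached to the shared "global" network. The container is removed afterwards.
# DOCKER_BIN lets you swap docker for another command (e.g. echo) for a dry run.
as_resolve() {
  "${DOCKER_BIN:-docker}" run --rm --network global busybox nslookup "$1"
}
```

For example, as_resolve webapp.nodejs-app.docker should print the container's address on the global network while that project is running.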

All the *.test domains point to localhost and are accessible via the browser. The global network handles all the *.docker hosts inside the containers.

Both *.test and *.docker names are an AS-Docker convention only.

Why *.test and not *.local or *.dev?

All the popular "developer" domains like local, dev or app are problematic, because the app and dev top-level domains were publicly released in 2018 and 2019 (see the .dev release and the .app release). Moreover, the local and localhost names may not work properly on some operating systems (see .local domain issues).

We decided to use the simplest, most logical names recommended by the community. The .test name is also reserved for testing by RFC 2606, so it will never be released as a public domain.

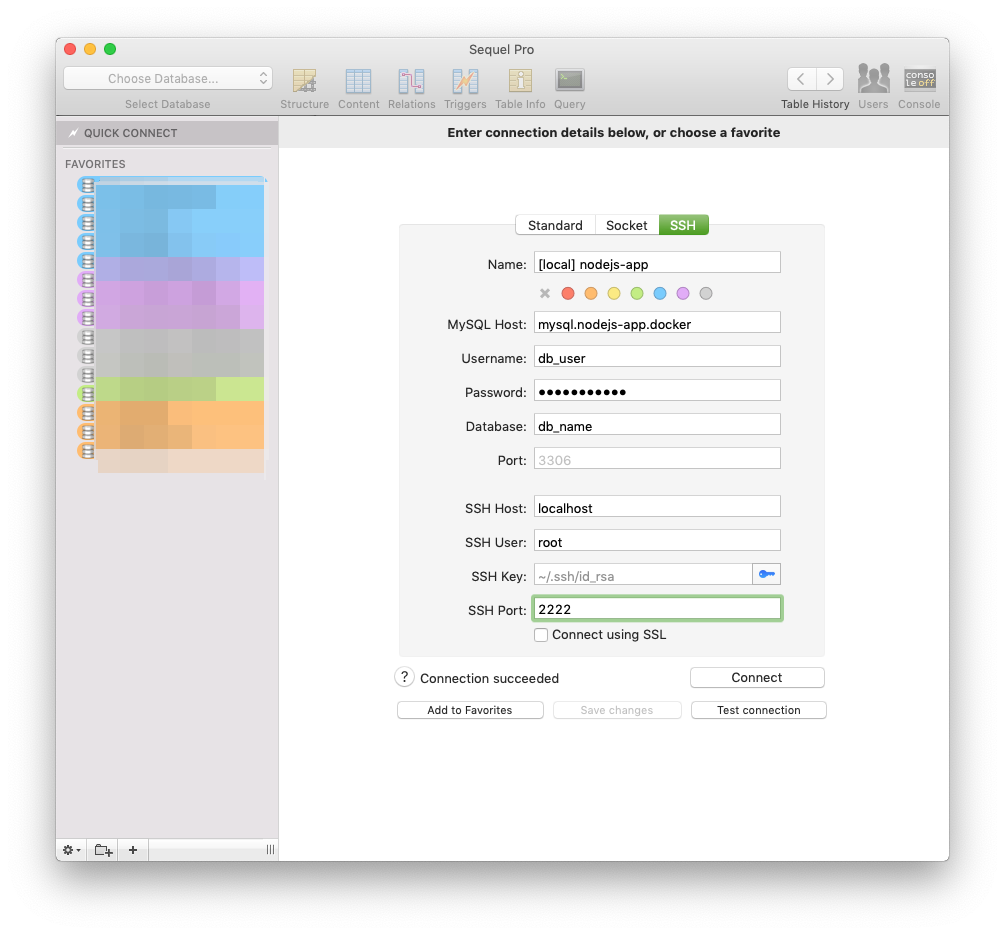

To reach MongoDB or MySQL through a local GUI (like MongoDB Compass), connect via the root@localhost:2222 SSH tunnel, using mysql.docker or mongo.docker as the host.

See the Demo section for more details.
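Besides the built-in SSH tunnel option that GUI clients such as MongoDB Compass offer, you can forward a local port through the same SSH container with plain OpenSSH (-L for local port forwarding, -N to run no remote command). A bash sketch; as_tunnel and SSH_BIN are hypothetical names:

```bash
# Hypothetical helper: forward LOCAL_PORT on your machine to TARGET_HOST:TARGET_PORT
# inside the Docker network, through the AS-Docker SSH container on localhost:2222.
# Keeps running until interrupted. SSH_BIN allows a dry run (e.g. SSH_BIN=echo).
as_tunnel() {
  local local_port="$1" target_host="$2" target_port="$3"
  "${SSH_BIN:-ssh}" -p 2222 -N -L "${local_port}:${target_host}:${target_port}" root@localhost
}
```

For example, as_tunnel 3307 mysql.docker 3306 lets a GUI connect to 127.0.0.1:3307; using 3307 locally avoids clashing with a native MySQL on 3306.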

Each project is now encapsulated: it doesn't map any ports, no external_links is needed, and the whole development team shares the same stack, no matter how many different projects they're working on. Developers can now focus on the code instead of struggling to set up and maintain the project.

A deeper insight

Local aliases system

Let's say we have a webapp container with some back-end stuff. To enter the back-end CLI tool, we can use the docker-compose script:

    docker-compose exec webapp bash

or run a single command directly:

    docker-compose exec webapp python ./manage.py users:create

Every time we want to run the ./manage.py script, we have to type the whole exec command. That's definitely not something developers love.

They would like a local manage alias to execute such tasks.
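The usual stopgap is a shell function in each developer's profile. A bash sketch for illustration only (this is not how Yake works); COMPOSE_BIN is a hypothetical override for a dry run:

```bash
# Plain-shell "manage" alias: forwards all arguments to ./manage.py
# inside the running webapp service of the current docker-compose project.
manage() {
  "${COMPOSE_BIN:-docker-compose}" exec webapp python ./manage.py "$@"
}
```

With it, manage users:create runs the same command as above. Because such a function lives in each developer's shell profile rather than in the repository, every teammate has to copy it by hand, which is the gap a project-level alias file closes.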

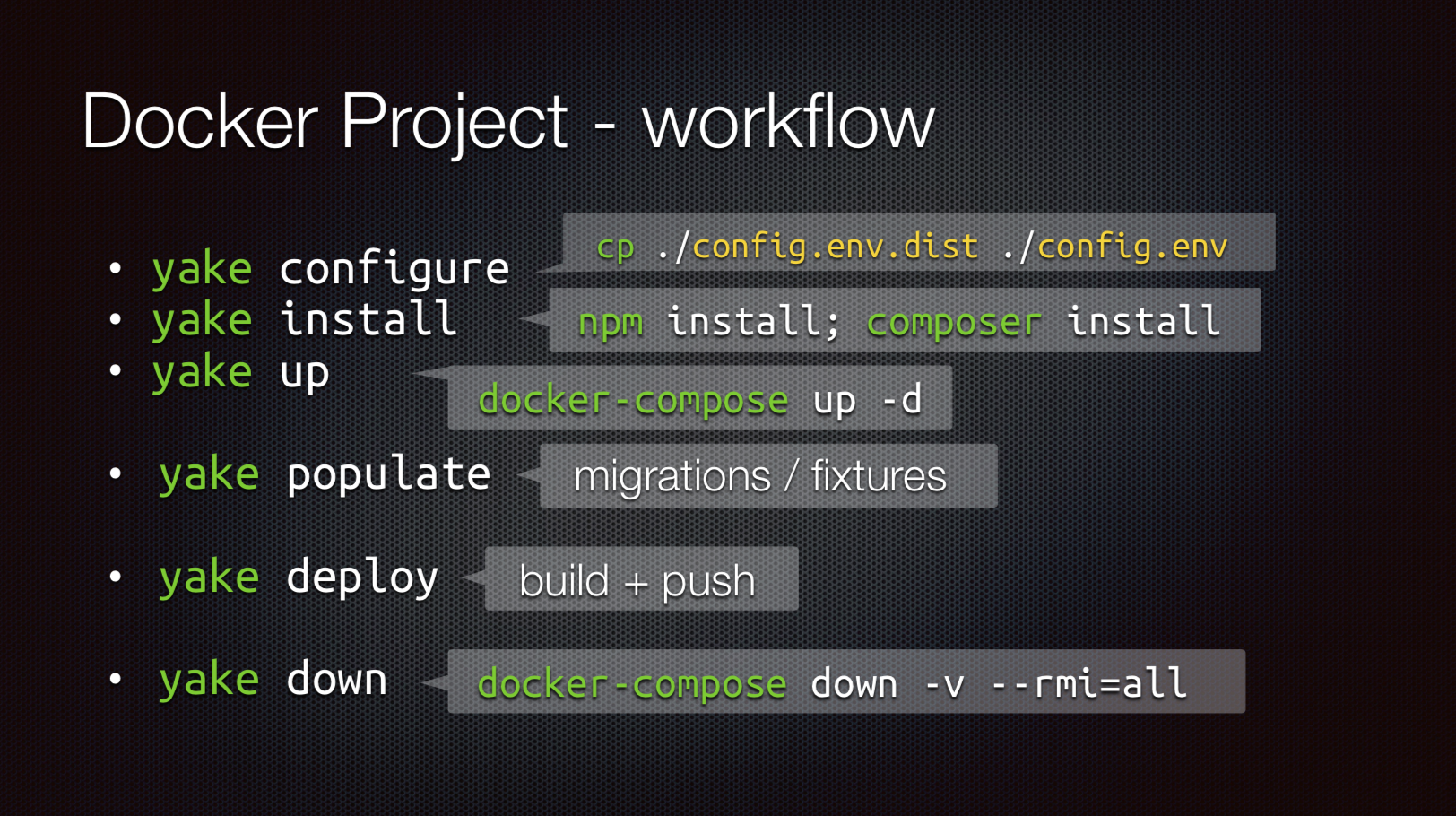

The solution was not as easy as we thought. Nevertheless, we managed to create a dedicated yet simple tool called Yake (manage project aliases).


We define aliases in the local Yakefile (a YAML file), then run them via the yake command. As a result, we can run:

    yake manage users:create

The next step was to turn all the most-used commands into unified yake aliases.

Docker-compose unification

All of a project's containers have to join the global network.

Traefik labels should contain the hosts under the *.test domain, and container names should be under the *.docker domain. To make this work, we created a docker-compose.yml template for all the projects.

Then we decided to keep all the containers' volumes, like database storage, in ~/data, in a subdirectory named after the container_name. For example, for MySQL:

    volumes:
      - ~/data/mysql.{name}.docker:/var/lib/mysql

Instead of separate docker-compose-local.yml or docker-compose-prod.yml files, for basic usage all the container environments are controlled only by ENVs within one Docker image.
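A minimal sketch of such a template, written as a bash function that prints a docker-compose.yml for a given project name. The layout mirrors the docker/webapp and docker/mysql directories from the Project files structure section; the Traefik 1.x label names, the mysql:5.7 image, the /app mount point and port 3000 are assumptions, not taken from the AS-Docker template:

```bash
# Hypothetical template: print a docker-compose.yml for PROJECT using
# *.test hosts for Traefik, *.docker container names, the external "global"
# network and data under ~/data/<container_name>. No ports are published.
# Assumes Traefik 1.x labels, a web app listening on 3000, and mysql:5.7.
as_compose_template() {
  local project="$1"
  # Unquoted EOF: ${project} is expanded, while ~ stays literal for docker-compose.
  cat <<EOF
version: "3"

services:
  webapp:
    build: ./docker/webapp
    container_name: webapp.${project}.docker
    env_file: ./docker/webapp/config.env
    volumes:
      - ./:/app
    labels:
      - traefik.frontend.rule=Host:${project}.test
      - traefik.port=3000
      - traefik.docker.network=global
    networks:
      - global

  mysql:
    image: mysql:5.7
    container_name: mysql.${project}.docker
    env_file: ./docker/mysql/config.env
    volumes:
      - ~/data/mysql.${project}.docker:/var/lib/mysql
    networks:
      - global

networks:
  global:
    external: true
EOF
}
```

For example, as_compose_template nodejs-app > docker-compose.yml yields webapp.nodejs-app.docker and mysql.nodejs-app.docker on the global network, with the web app served at nodejs-app.test.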

Project files structure

The docker directory holds all the containers' data, like build or config files. The deploy directory is intended for staging, test and production environment files. We also decided to use one docker-compose.yml per project.

.
├── README.md
├── docker-compose.yml
├── Yakefile
├── deploy
│   ├── prod
│   │   └── docker-compose.yml
│   └── rancher
│       └── docker-compose.yml
└── docker
    ├── mysql
    │   ├── config.env
    │   └── config.env.dist
    └── webapp
        ├── config.env
        ├── config.env.dist
        └── Dockerfile

Mounted volumes policy

Linux-based file systems use chmod-style file and directory permissions. For example, a file may be writable by root but only readable by a regular user.

Windows-based file systems don't support this mechanism, because Windows has its own permission approach. When we mount a directory from Linux into Docker, permissions work as expected, but the same stack run on Windows or macOS may crash the webapp with a "permission denied" error.

To solve the permission issues with mounted volumes, we used the entrypoint feature. If the container runs as its own non-root user and the mounted volume has a non-root UID (Linux), the container's user takes over the volume's UID and so becomes the owner of the volume. But if the volume is root-owned (Mac, Windows), the run command is executed as root (with root permissions).

The whole "permission" trick is encapsulated in the base image, and the project's Dockerfile can still use its own CMD and ENTRYPOINT params. The upside is that we have one image build for all operating systems with no "permission denied" issues :)
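The entrypoint logic described above can be sketched in bash. This is not the AS-Docker base image code: APP_USER, APP_DIR, dropping privileges with gosu and reading the owner with GNU stat -c '%u' are all assumptions for the example.

```bash
#!/usr/bin/env bash
# Sketch of a permission-adapting entrypoint. Assumptions: the container starts
# as root, the image has a non-root user APP_USER, the project volume is mounted
# at APP_DIR, and gosu is installed to drop privileges.
set -eu

# Decide who should run the command, based on the UID that owns the volume.
# Prints "root" for a root-owned mount (Mac/Windows as described above),
# otherwise the non-root user's name (Linux, where the host UID is preserved).
as_pick_user() {
  local volume_uid="$1" app_user="$2"
  if [ "$volume_uid" -eq 0 ]; then
    echo root
  else
    echo "$app_user"
  fi
}

as_entrypoint() {
  local app_user="${APP_USER:-app}" app_dir="${APP_DIR:-/app}"
  local volume_uid run_user
  volume_uid="$(stat -c '%u' "$app_dir")"
  run_user="$(as_pick_user "$volume_uid" "$app_user")"
  if [ "$run_user" = root ]; then
    exec "$@"
  fi
  # Give the container user the volume owner's UID (-o allows a duplicate UID),
  # so it owns the mounted files, then run the command as that user.
  usermod -o -u "$volume_uid" "$app_user"
  exec gosu "$app_user" "$@"
}

# The real entrypoint file would end with:
# as_entrypoint "$@"
```

Keeping the decision in a separate function makes the Linux and Mac/Windows branches easy to check without a running container.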

Features

SSH Tunnel

Direct access to any container from your local machine, using its container_name as the host.

Traefik

Work with many projects simultaneously, including local, dev and prod modes of the same app, or even different versions of the same app.

Native routing

Let many microservices communicate with each other. Use your API from the front-end. All with no additional configuration.

Yake

One way to set up a project, including dependency installation, migrations and fixtures.

No matter the technology: Python, PHP, NodeJS or Angular/React.

Zero port mapping

Forget about mapping your containers' ports to your local machine to gain direct access or to enable connections between containers. Just keep each project isolated.

Unification

A unified project structure for all Docker-related files. Start developing a project without wasting time on Docker configuration.

Best practices

Be sure your project will work the same way after Docker upgrades.

By following Docker best practices, AS-Docker stays a stable solution.

Easy usage

Simple documentation with examples for all team members: front-end, back-end and dev-ops.

Get started

Requirements

Software

AS-Docker can be used on any operating system: Windows, macOS or Linux.

A default Docker CE setup is required, along with the docker-compose tool.

Also, make sure ports 80 and 2222 are not already in use on your local machine.

Yake

Quick HOWTO:

    curl -L https://cpanmin.us | perl - App::cpanminus
    curl -L https://yake.amsdard.io/install.sh | sudo -E bash
    yake --help

SSH keys

You can use the Creating SSH Keys article to generate your keys. Put them in the default ~/.ssh path.

Local DNS wildcard
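A wildcard DNS entry saves you from adding every *.test host to /etc/hosts. One common approach is dnsmasq's address option, which answers a fixed IP for a domain and all its subdomains. A bash sketch; the helper name is hypothetical, and the location of dnsmasq's config files differs between Linux distributions and Homebrew on macOS:

```bash
# Hypothetical helper: print a dnsmasq rule that resolves DOMAIN and every
# subdomain (default: test, i.e. *.test) to 127.0.0.1.
as_dnsmasq_rule() {
  printf 'address=/%s/127.0.0.1\n' "${1:-test}"
}
```

For example, as_dnsmasq_rule | sudo tee /etc/dnsmasq.d/test.conf, then restart dnsmasq and make sure your system resolver queries it (on macOS, for instance, a file /etc/resolver/test containing nameserver 127.0.0.1).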

Environment - local

- Create a new directory ~/Projects.
- Clone the local/ directory from the as-docker/projects-environment repository into your local ~/Projects:

    git archive --remote=git@bitbucket.org:as-docker/projects-environment.git HEAD local/ | tar -x --strip=1

- Create the Docker global network:

    docker network create global

- Set up the stack:

    docker-compose build --pull --build-arg ID_RSA_PUB="$(cat ~/.ssh/id_rsa.pub)"
    docker-compose pull
    docker-compose up -d --force-recreate

- Open http://portainer.test/ (optionally add portainer.test to your /etc/hosts if you don't use dnsmasq) to check that your local stack works fine.

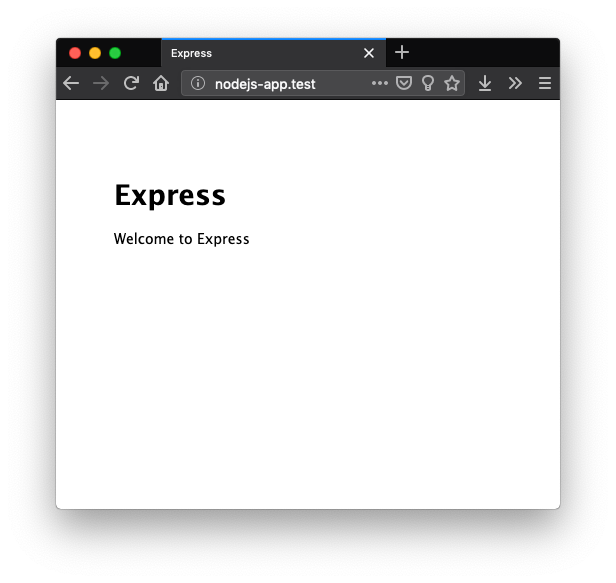

Project setup (NodeJS app)

- Make sure you have NodeJS (LTS) installed. Then go to the ~/Projects directory.
- Create a new project:

    npm install express-generator -g
    express --view=pug nodejs-app
    cd nodejs-app
    npm install

- Add the nodejs-as-docker library:

    npm i nodejs-as-docker
    ./node_modules/nodejs-as-docker/setup

- Set up the Docker stack:

    yake configure
    yake up

- Open http://nodejs-app.test/ to check how it works.

  Now you can access MySQL and Redis via your local GUI, using the SSH tunnel: ssh -p 2222 root@localhost.

  When you want to request the Webapp container from another project, just use webapp.nodejs-app.docker as the host.
- You can build and push the container's image with:

    yake deploy webapp

- When you stop working on the project, just run:

    yake down

Installers

They write about us

AS Docker - Complete approach for Docker development


As soon as Docker became a popular deployment engine, it started to be used for local development as well. That brings some issues, for example managing many projects at once on one local machine. Let's take a look at the example below to see what problems we met while adopting Docker in our company and how we solved them!

Yake - yet another Make clone


Let us invite you to the CLI (command-line interface) world. How often do you need an alias for some long commands? How often do you need to copy the same bash commands from one project to another? Do these questions sound familiar? It was a huge challenge for us too!